How we measure quality as a startup

Speed matters. Quality matters. Finding the right balance is key.

As a software startup, measuring and maintaining quality presents challenges as we try to balance ambitious velocity goals, an iterative approach to UX, and stakeholder feedback. Our product changes significantly from week to week, with new features or iterations deployed daily. So, how can we be sure we’re being mindful of the quality of our product as it evolves so quickly?

Ways to measure quality

A number of metrics can be used to measure overall quality, including but not limited to:

Bugs

Regressions: how many bugs we’re causing in previously implemented features

Story bugs: how many bugs are discovered during QA

Severity of bugs: minor visual quirks versus impact on critical functionality

Test coverage

Unit tests

End-to-end tests

Each metric has its own pros and cons, and no single measure can capture the overall quality of a product, so we have to look at multiple metrics for a more holistic view. After 6 months of development, we have some preliminary numbers to start assessing quality.
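To make the bug metrics above concrete, here is a minimal sketch of how they could be tallied per month from a list of bug records. The `Bug` fields, the month format, and the sample records are all assumptions made for illustration; they are not our tracker's schema or our real data.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Bug:
    month: str     # hypothetical format, e.g. "2024-01"
    kind: str      # "regression" or "story" (found during QA of a story)
    severity: str  # "blocker" or "minor"


def summarize(bugs):
    """Return per-month counts plus the share of bugs that are blockers."""
    summary = {}
    for month in sorted({b.month for b in bugs}):
        monthly = [b for b in bugs if b.month == month]
        kinds = Counter(b.kind for b in monthly)
        blockers = sum(1 for b in monthly if b.severity == "blocker")
        summary[month] = {
            "regressions": kinds["regression"],
            "story_bugs": kinds["story"],
            "blocker_share": blockers / len(monthly),
        }
    return summary


# Made-up records for illustration only; not data from this post.
bugs = [
    Bug("2024-01", "story", "minor"),
    Bug("2024-01", "story", "blocker"),
    Bug("2024-02", "story", "blocker"),
    Bug("2024-02", "regression", "minor"),
    Bug("2024-02", "story", "blocker"),
    Bug("2024-02", "story", "minor"),
]

for month, stats in summarize(bugs).items():
    print(month, stats)
```

Keeping regressions, story bugs, and blocker share as separate fields, rather than folding them into one score, matches the idea that no single number tells the whole story.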

Decoding quality metrics

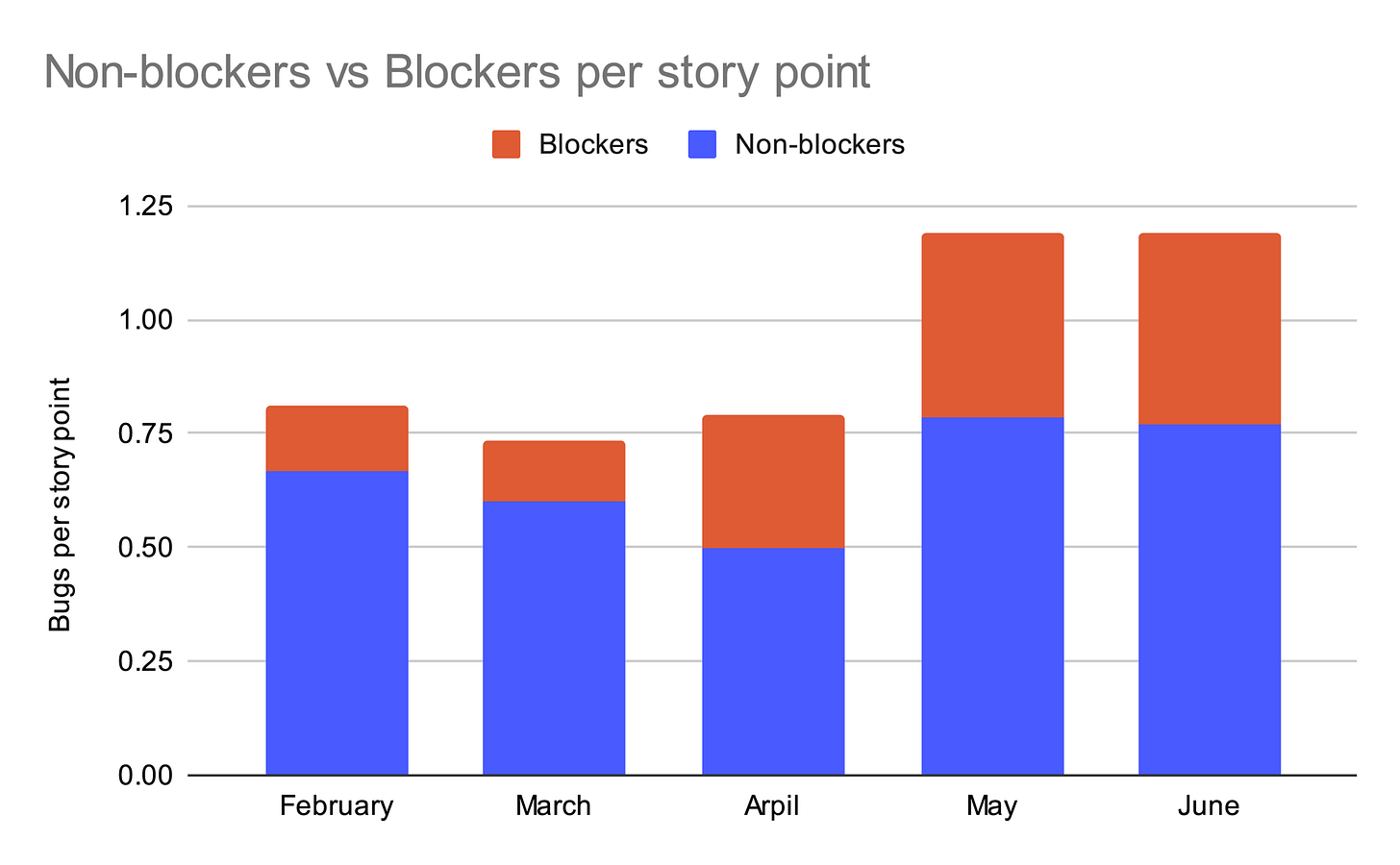

So far, we have trended towards more bugs and a higher proportion of blocker bugs – we consider a bug a “blocker” if it impacts functionality to a degree that the story cannot be closed. Yikes! There’s some context missing though, so let’s take a closer look.

Increasing complexity: As we progress further in our roadmap, we're encountering more complexity as we create more interesting features, leading to a potential for more bugs as we tackle harder problems.

Increasing velocity: As we find our rhythm as an engineering team, we’re starting to work more efficiently, covering more story points month by month.

Iteration, iteration, iteration: We believe it's important to iterate on features during development to optimize behavior and design. While this usually leads us in a better direction, it can result in more gray areas in requirements and edge cases.

At a glance, it looks like we’re producing stories with more defects, and more impactful ones, but the context is important here: we’re developing increasingly complex features, and we’re finding ways of doing it faster and faster.
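One way to put that context into numbers is to normalize bug counts by the amount of work delivered. The sketch below divides bugs found in QA by story points completed. The function name and the figures are invented for illustration, and whether our real data shows the same pattern would need to be checked against the tracker.

```python
def defects_per_point(bug_count, story_points):
    """Bugs found in QA per story point delivered."""
    if story_points <= 0:
        raise ValueError("story_points must be positive")
    return bug_count / story_points


# Hypothetical months: (bugs found in QA, story points completed).
months = {
    "month 1": (10, 40),
    "month 6": (18, 90),
}

rates = {name: defects_per_point(b, p) for name, (b, p) in months.items()}
for name, rate in rates.items():
    print(f"{name}: {rate:.2f} bugs per story point")
```

With these invented numbers, the raw bug count rises from 10 to 18 while the rate per story point falls from 0.25 to 0.20, which is the kind of effect a raw count on its own would hide.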

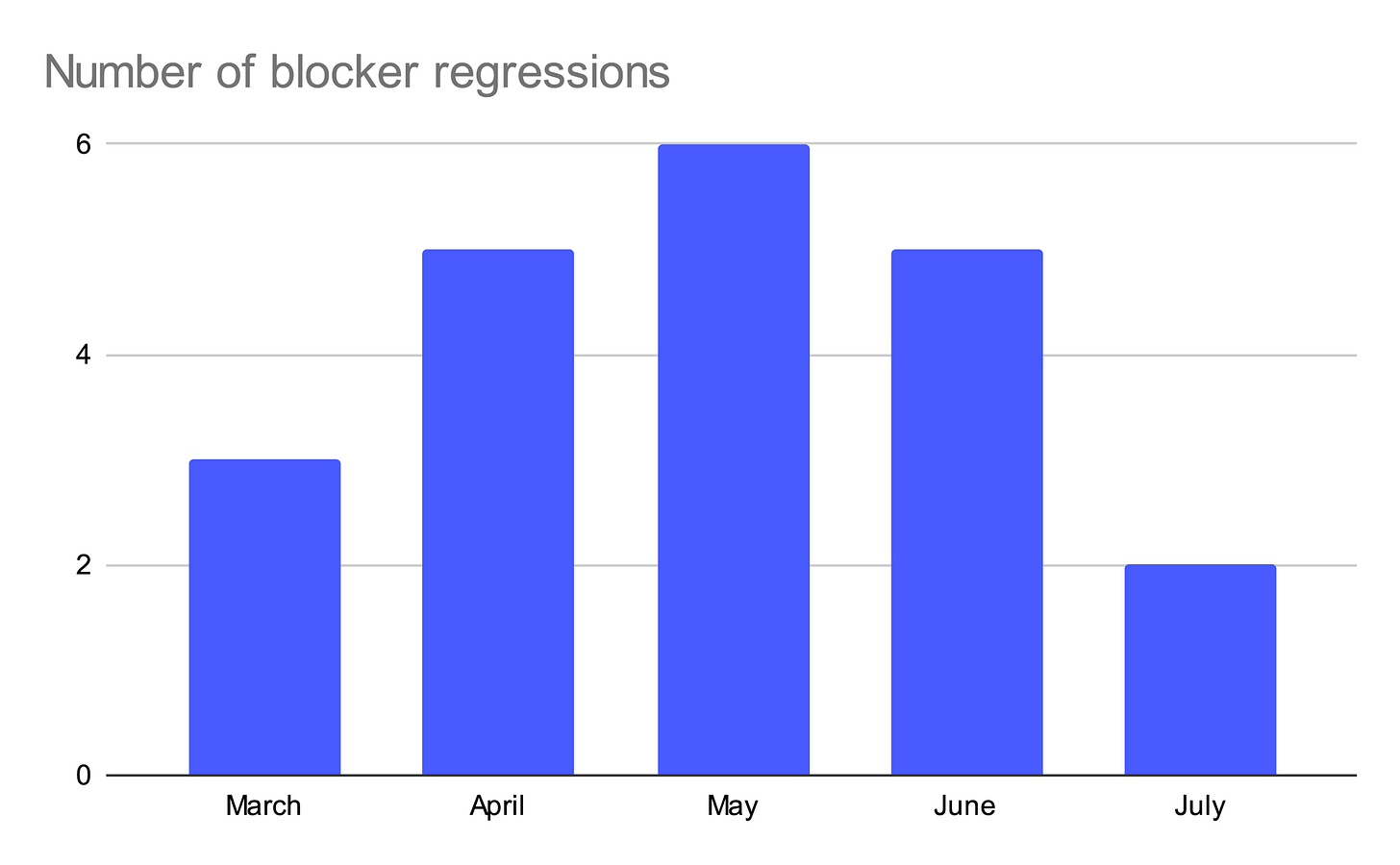

Regression of existing functionality is another piece of the puzzle. How much are we breaking by moving at breakneck speed? Surprisingly little! Blocking regressions have been blessedly few over the past 6 months, and though we have limited data, we do not see regressions increasing as we move forward.

Aside from bugs, test coverage is another indicator of overall quality. While we are currently generating unit test coverage reports, we are not enforcing a coverage threshold on pull requests, so that we can keep a faster development pace. Eventually, we will need to strike a balance between test coverage and velocity, but we’re forgoing that conversation for now, until we start to see a decline in quality through other metrics.

In terms of end-to-end coverage, we’re automating roughly 50% of identified critical paths in the product. A major difficulty in maintaining end-to-end tests lies in keeping up with the pace of development, and our velocity is continuing to increase. Another challenge lies in how dynamically our product is changing. The vast majority of test failures are simply a result of our features evolving and paths changing, not of actual bugs.
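The critical-path figure is a simple ratio: automated critical paths divided by identified critical paths. A minimal sketch follows, assuming a hand-maintained mapping of path names to an "automated" flag. The path names are hypothetical and are not our product's actual flows.

```python
# Hypothetical critical paths; True means an end-to-end test automates it.
critical_paths = {
    "sign up": True,
    "log in": True,
    "create project": False,
    "invite teammate": False,
}


def critical_path_coverage(paths):
    """Fraction of identified critical paths that have an automated test."""
    if not paths:
        return 0.0
    return sum(paths.values()) / len(paths)


def unautomated(paths):
    """Names of critical paths that still rely on manual testing."""
    return sorted(name for name, automated in paths.items() if not automated)


print(f"coverage: {critical_path_coverage(critical_paths):.0%}")
print("still manual:", unautomated(critical_paths))
```

Listing the unautomated paths alongside the ratio makes it easier to decide which end-to-end test to write next.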

Taking action on data

It’s important to remember that this data represents a snapshot in time, 6 months into a year-long effort to deliver an MVP to customers. It’s a starting point to build on, and it gives us a few pearls of wisdom.

Adapt strategies based on changing requirements. Though we have had few major regressions, the number of significant bugs discovered in QA is slowly increasing. If this trend continues, consider:

Increasing unit test coverage.

Slowing velocity slightly in favor of quality.

Focus on key paths. Shift from writing many individual end-to-end tests to fewer critical-path end-to-end tests. These are faster to implement, which makes it easier to keep up with development and leads to greater overall coverage.

Test the big picture. Make end-to-end tests abstract enough that small changes do not generate false-positive test failures, yet specific enough to catch bugs in critical functionality.

Continuous improvement. Continue to assess a wide range of metrics and be ready to pivot our approach if quality declines.
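As an illustration of the "Test the big picture" point above, one common approach is to have tests talk to a small flow object that exposes user intents, so that selectors live in one place and the test asserts only on the outcome. The sketch below is not our test suite: `FakeDriver` is an in-memory stand-in for a real browser driver, and every class, method, and selector name in it is hypothetical.

```python
class FakeDriver:
    """In-memory stand-in for a browser driver (hypothetical API)."""

    def __init__(self):
        self.fields = {}
        self.saved = []

    def fill(self, selector, text):
        self.fields[selector] = text

    def click(self, selector):
        # Simulate the app saving a project when the save button is clicked.
        if selector == "[data-test=save-project]":
            self.saved.append(self.fields.get("[data-test=project-name]", ""))

    def texts(self, selector):
        return list(self.saved) if selector == "[data-test=project-row]" else []


class ProjectsFlow:
    """The only place that knows selectors; tests call intent-level methods."""

    NAME_INPUT = "[data-test=project-name]"
    SAVE_BUTTON = "[data-test=save-project]"
    ROW = "[data-test=project-row]"

    def __init__(self, driver):
        self.driver = driver

    def create_project(self, name):
        self.driver.fill(self.NAME_INPUT, name)
        self.driver.click(self.SAVE_BUTTON)

    def project_names(self):
        return self.driver.texts(self.ROW)


def check_user_can_create_project(driver):
    flow = ProjectsFlow(driver)
    flow.create_project("Roadmap")
    # Assert on the outcome the user cares about, not on layout or copy.
    assert "Roadmap" in flow.project_names()


check_user_can_create_project(FakeDriver())
```

If the form is redesigned, only `ProjectsFlow` needs updating and the check itself stays the same, while a real failure to save the project still fails the assertion.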

The bottom line

Measuring and maintaining quality in a rapidly evolving startup environment is undeniably challenging. Our testing strategy comes down to careful consideration of our specific circumstances, and those circumstances are constantly changing. Staying adaptable in our approach and relying on data to make informed decisions will be crucial to ensuring that our product not only meets but exceeds the expectations of those it serves.